Generative design is a methodology in which computers aid the design process through algorithms. Closely related to parametric design, it uses algorithm-based methods (not always computational ones) to balance design intent with the outcome. It sounds complicated, but it makes the designer's work not only simpler but also more efficient in the long run. Two good examples are finding the most mathematical way to give everyone in a sports stadium a good view and the most efficient way to draw parking lines in a lot. Generative design also produces some of the most beautiful and organic forms in modern architecture, and, like everything else in the world, it has found its way into the metaverse.
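The stadium example can be made concrete with the C-value used in UK sports-ground guidance (the "Green Guide"). The C-value is the vertical clearance, in millimetres, between a spectator's sightline to the point of focus and the eye of the person in front: C = D(N + R)/(D + T) − R. Below is a minimal Python sketch that generates a seating section row by row. For each row, it solves that formula for the riser height needed to hit a target C-value. The function names and dimensions are hypothetical and only illustrate the idea.

```python
# Minimal sketch: a stadium seating section generated from a target C-value.
# Formula (UK "Green Guide"): C = D * (N + R) / (D + T) - R, all in millimetres.
# Function names and example dimensions are hypothetical, for illustration only.


def c_value(d: float, n: float, r: float, t: float) -> float:
    """Sightline clearance over the eye of the spectator in front.

    d: horizontal distance from the front spectator's eye to the point of focus
    n: riser height up to the row behind
    r: height of the front spectator's eye above the point of focus
    t: tread (row) depth
    """
    return d * (n + r) / (d + t) - r


def riser_for_target(d: float, r: float, t: float, c_target: float) -> float:
    """Riser height N that gives exactly c_target (the formula solved for N)."""
    return (c_target + r) * (d + t) / d - r


def generate_section(rows: int, d0: float, r0: float, t: float, c_target: float):
    """Return a list of (D, R, riser) tuples, one per row, front to back."""
    d, r = d0, r0
    section = []
    for _ in range(rows):
        n = riser_for_target(d, r, t, c_target)
        section.append((d, r, n))
        # The next row back is one tread further away and one riser higher.
        d += t
        r += n
    return section


if __name__ == "__main__":
    for d, r, n in generate_section(rows=5, d0=12000.0, r0=800.0, t=800.0, c_target=90.0):
        print(f"D={d:7.0f}  R={r:7.1f}  riser={n:6.1f}")
```

Each row sits higher and further back than the one in front, so the required riser grows from row to row. This is why a stand designed for a constant C-value curves upward instead of rising in a straight line.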

We recently sat down with Far, one of the leaders in the Web3 architecture space, to talk about his new project, SOLIDS, and how architecture IRL is being strongly influenced by the technology behind it.

Who are you, and what’s your background in architecture and Web3?

I’m Far, an artist and engineer interested in where visual arts and technology meet. My background is in Engineering, Design, and Art. I’ve studied Civil Engineering, Architecture, and Fine Art, and currently, I’m working on a Ph.D. in visual studies, where I focus on digital images and visual culture.

When I look at digital images, I am interested in dissecting them on different levels, historically and conceptually. But what really fascinates me is working out how they were made. Out of curiosity, I got into crypto back in 2015 and never looked back. When Ethereum launched, I was flirting with the idea of fractionalizing artworks and ran some experiments with it. At the same time, I was part of the Decentralized Autonomous Kunstverein (DAK), one of the first artist-run DAOs, which was part of the phenomenal exhibition “Proof-of-Work” at Schinkel Pavillon in 2018. Last summer, I started Infinites AI.

How has the software behind architecture played a role in how we see buildings?

I was taught architecture by the first generation of architects who used animation software to create architecture, as opposed to more conventional CAD (computer-aided design) tools. This generation met at Columbia University in the late ’90s and early 2000s and was guided by older architects such as Peter Eisenman. These young architects began giving software more autonomy to dictate architectural form, and that was when digital architecture started to penetrate deeper into the schools.

In parallel, more senior architects such as Zaha Hadid, Frank Gehry, and Thom Mayne already had large practices and began bringing these young architects, along with their tools, into their teams. That is when a big shift became visible in the built environment, one in which software had a major impact.

Generative architecture has already come of age as an important movement in the field over the past decades, with people like Patrick Schumacher and Zaha Hadid claiming it as the new dominant style and the biggest movement since Modernism. In plainer language, what we have seen in architecture over the past decades is the impact of software and algorithms on form, and it's pretty simple. Drawing a line on a drafting board is not the same as drawing it on a computer. In both cases, the tools exert an important influence. On paper, you draw a curve with a compass; on the computer, you might use a spline. They seem to be the same, but they are not. The same can be extrapolated to buildings and cities.
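Far's compass-versus-spline point can be checked numerically. The minimal Python sketch below assumes a common way software approximates a quarter circle: a single cubic Bézier spline with control-point factor k = 4/3(√2 − 1). The function names are illustrative. The spline matches the arc at both ends and at its midpoint, but its distance from the centre drifts slightly in between, which never happens with a compass arc.

```python
import math

# One cubic Bezier approximating a quarter circle: the common control-point
# factor k = 4/3 * (sqrt(2) - 1), about 0.5523, scaled by the radius.
K = 4.0 / 3.0 * (math.sqrt(2.0) - 1.0)


def quarter_arc_controls(radius: float = 1.0):
    """Control points for the arc from (radius, 0) to (0, radius)."""
    return [(radius, 0.0), (radius, K * radius), (K * radius, radius), (0.0, radius)]


def bezier_point(ctrl, t: float):
    """Evaluate a cubic Bezier curve at parameter t in [0, 1]."""
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = ctrl
    u = 1.0 - t
    b0, b1, b2, b3 = u**3, 3 * u * u * t, 3 * u * t * t, t**3
    return (b0 * x0 + b1 * x1 + b2 * x2 + b3 * x3,
            b0 * y0 + b1 * y1 + b2 * y2 + b3 * y3)


def max_radial_error(radius: float = 1.0, samples: int = 10_000) -> float:
    """Largest |distance from centre - radius| over evenly spaced samples."""
    ctrl = quarter_arc_controls(radius)
    worst = 0.0
    for i in range(samples + 1):
        x, y = bezier_point(ctrl, i / samples)
        worst = max(worst, abs(math.hypot(x, y) - radius))
    return worst


if __name__ == "__main__":
    # A compass arc has zero radial error by construction; the spline does not.
    print(f"max radial error for r = 1: {max_radial_error():.6f}")
```

For a unit radius, the largest deviation is a small fraction of a percent of the radius. That is invisible on a drawing, but it still makes the spline a different curve from the arc.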

You can play a game, like I do, of guessing which software was used to design any building you visit. Some might have been designed in Rhinoceros, others in Maya or Revit, and some famous ones in CATIA, the software Frank Gehry used to design the Guggenheim Museum in Bilbao. Nowadays, many buildings are created with software made specifically for this purpose, like Autodesk Revit. This is why there is a certain homogeneity in city skylines when you look at newly erected buildings: they all use the same libraries and tools within the same program.

Here is where it gets exciting. What if the software used to create a building was actually made to create VFX for films? That was the case with a lot of interesting architecture in the ’90s, when architects started to use Maya to design buildings. Software descended from the tools used to create the dinosaurs for Jurassic Park was being used to design buildings at Columbia University. This completely changed how buildings were designed, giving the software agency by letting its smooth surfaces morph into shapes and by leveraging the animation timeline. After all, this software is made to create animations for Hollywood films.

How does SOLIDS fit in all of this?

Architects plan their buildings in the virtual realm. Before anyone even considers whether a building, or even a city, will be erected, it already exists in a virtual environment. In a way, one could argue that the built environment was once in the metaverse, or at least born in it.

Virtual environments became more tangible with the incorporation of blockchain, making the metaverse a material extension of the real world. As more metaverses emerge, there is a real need to shape them, whether through land design, the organization of virtual cities, or simply the creation of space. This is where architecture is needed. That, combined with the nature of the profession, which is in a way already metaverse-ready, opens up a completely new ecosystem that benefits everyone.

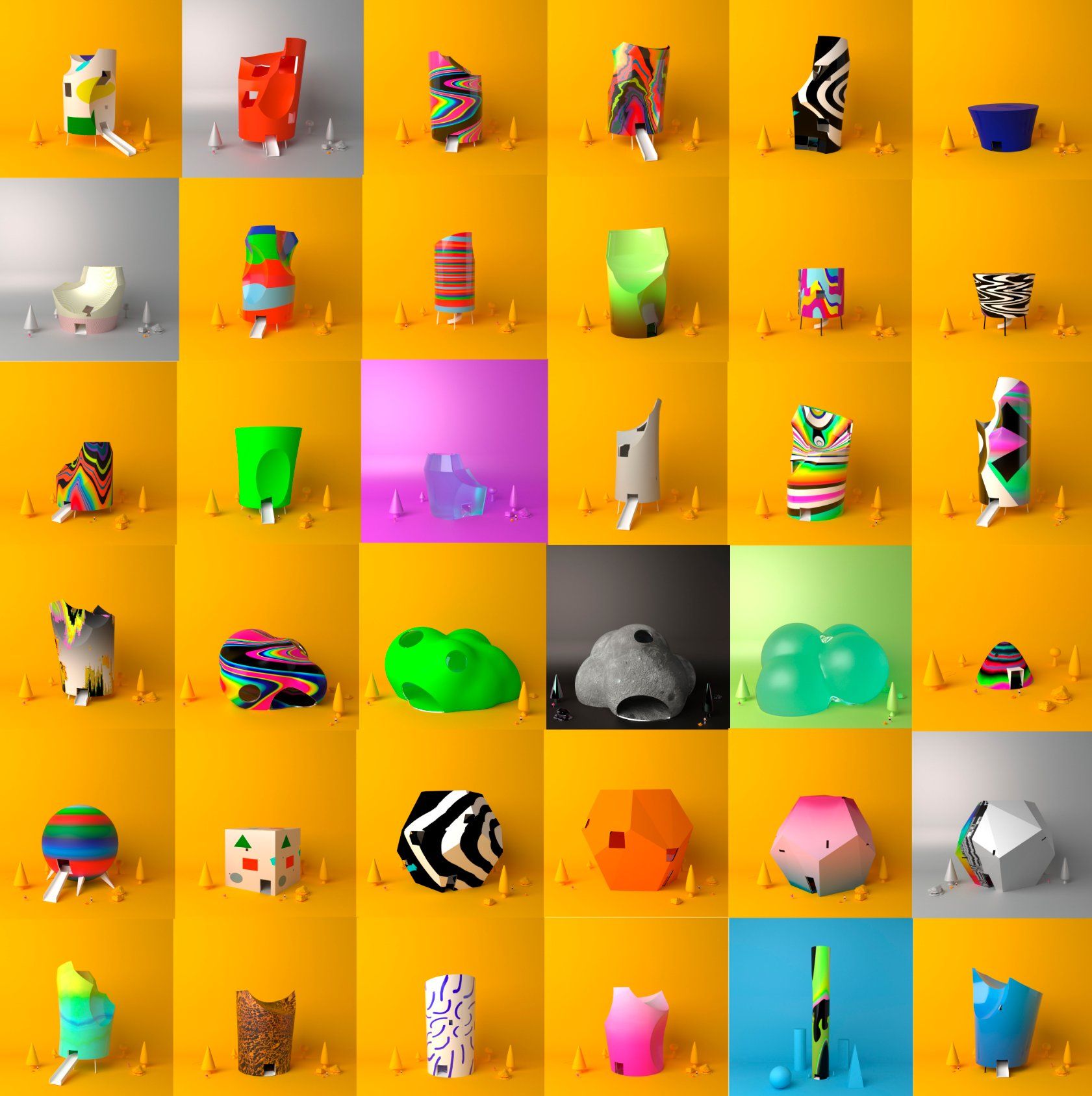

This is where SOLIDS comes in, as a proposed standard for architecture that can populate any metaverse. SOLIDS comes from generative art coalescing with generative architecture. On the one hand, we created over a dozen scripts to generate different archetypes in 3D; on the other, we developed a set of techniques to create 2D images, some using scripting tools and others purely digital painting. The 2D images serve as skins that wrap the 3D objects. This makes for a very versatile workflow that can produce distinct, unique buildings varying in size, shape, entrances, openings, and so on.
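The workflow described above, a 3D archetype script combined with a 2D skin and parameters bounded by designer-set ranges, can be sketched in Python. This is a minimal illustration under stated assumptions: the archetype names, skin names, and ranges are hypothetical placeholders, not the actual SOLIDS scripts. It shows how a seed can deterministically choose an archetype, a skin, and parameter values within ranges, so that adjusting a range reshapes the whole family of outputs.

```python
import random
from dataclasses import dataclass

# Hypothetical placeholders: the real SOLIDS scripts, archetypes and skins are
# not described in detail, so these names and ranges are invented.
ARCHETYPES = ("tower", "dome", "arch", "block")
SKINS = ("painting_a", "scripted_pattern_b", "painting_c")

# Designer-set ranges for each output (int bounds are sampled as ints).
DEFAULT_RANGES = {
    "height_m": (6.0, 60.0),
    "footprint_m": (4.0, 30.0),
    "entrances": (1, 4),
    "openings": (0, 24),
}


@dataclass(frozen=True)
class Building:
    archetype: str
    skin: str
    height_m: float
    footprint_m: float
    entrances: int
    openings: int


def generate(seed: int, ranges=DEFAULT_RANGES) -> Building:
    """Deterministically derive one building from a seed and the ranges."""
    rng = random.Random(seed)  # same seed + same ranges -> same building

    def sample(name: str):
        lo, hi = ranges[name]
        if isinstance(lo, int) and isinstance(hi, int):
            return rng.randint(lo, hi)  # inclusive integer range
        return round(rng.uniform(lo, hi), 2)

    return Building(
        archetype=rng.choice(ARCHETYPES),  # which 3D script to run
        skin=rng.choice(SKINS),            # which 2D image wraps the mesh
        height_m=sample("height_m"),
        footprint_m=sample("footprint_m"),
        entrances=sample("entrances"),
        openings=sample("openings"),
    )


if __name__ == "__main__":
    for seed in range(3):
        print(generate(seed))
    # Tightening one range shifts every output that depends on it.
    narrow = {**DEFAULT_RANGES, "height_m": (10.0, 12.0)}
    print(generate(0, narrow))
```

Seeding a private `random.Random` instance, instead of the global generator, keeps each building reproducible from its seed alone. This is one plausible way a generative collection could tie a specific output to a specific token.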

Will SOLIDS work in all the metaverses?

For now, each model is provided to the user in FBX and GLB formats, so it can be imported into any 3D modeling software, web-based application, or game engine, and from there into the metaverse. Each metaverse has its own specifications for setting up collisions and its own poly-count limits, so SOLIDS will keep adapting as new metaverses emerge. At the moment, all SOLIDS work in metaverses that accept high-poly models. We are currently working on adapting the models for Decentraland and other web-based metaverses.
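Checking a model against a platform's poly-count limit can be sketched in Python for the GLB format. The glTF 2.0 specification defines a GLB file as a 12-byte header followed by a JSON chunk and, usually, a binary chunk. The sketch reads only the JSON chunk and estimates triangles from accessor counts. The platform names and budgets are hypothetical placeholders, not the limits of any real metaverse, and FBX files are not handled.

```python
import json
import struct

GLB_MAGIC = 0x46546C67   # ASCII "glTF" read as a little-endian uint32
CHUNK_JSON = 0x4E4F534A  # ASCII "JSON" read as a little-endian uint32


def read_gltf_json(glb: bytes) -> dict:
    """Parse the JSON chunk of a glTF 2.0 binary (.glb) file."""
    magic, version, _length = struct.unpack_from("<III", glb, 0)
    if magic != GLB_MAGIC or version != 2:
        raise ValueError("not a glTF 2.0 .glb file")
    chunk_len, chunk_type = struct.unpack_from("<II", glb, 12)
    if chunk_type != CHUNK_JSON:
        raise ValueError("first chunk is not JSON")
    return json.loads(glb[20:20 + chunk_len].decode("utf-8"))


def triangle_count(gltf: dict) -> int:
    """Triangles per mesh definition (node instancing is ignored)."""
    accessors = gltf.get("accessors", [])
    total = 0
    for mesh in gltf.get("meshes", []):
        for prim in mesh.get("primitives", []):
            mode = prim.get("mode", 4)  # 4 = TRIANGLES, the glTF default
            src = prim["indices"] if "indices" in prim else prim["attributes"]["POSITION"]
            count = accessors[src]["count"]
            if mode == 4:
                total += count // 3
            elif mode in (5, 6):  # TRIANGLE_STRIP, TRIANGLE_FAN
                total += max(count - 2, 0)
            # points and lines (modes 0-3) contribute no triangles
    return total


# Hypothetical placeholder budgets, NOT any platform's published limits.
BUDGETS = {"high_poly_world": 500_000, "web_world": 20_000}


def fits_budget(glb: bytes, platform: str) -> bool:
    """True if the model's estimated triangle count is within the budget."""
    return triangle_count(read_gltf_json(glb)) <= BUDGETS[platform]


def make_minimal_glb(gltf: dict) -> bytes:
    """Build a JSON-only .glb in memory, for trying the functions above."""
    body = json.dumps(gltf).encode("utf-8")
    body += b" " * (-len(body) % 4)  # JSON chunk is padded with spaces to 4 bytes
    header = struct.pack("<III", GLB_MAGIC, 2, 12 + 8 + len(body))
    return header + struct.pack("<II", len(body), CHUNK_JSON) + body


if __name__ == "__main__":
    # A stripped-down cube description (not a fully valid glTF asset):
    # 8 vertices and 36 indices, i.e. 12 triangles.
    cube = {
        "asset": {"version": "2.0"},
        "accessors": [{"count": 8}, {"count": 36}],
        "meshes": [{"primitives": [{"attributes": {"POSITION": 0}, "indices": 1}]}],
    }
    glb = make_minimal_glb(cube)
    print(triangle_count(read_gltf_json(glb)), fits_budget(glb, "web_world"))
```

For a real file, the bytes would come from `open(path, "rb").read()`. Counting each mesh once understates the total when one mesh is instanced by several nodes, so a stricter check would also walk the scene's nodes.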

How much human influence was behind these designs, and how much was from an algorithm?

The design of the SOLIDS was a back-and-forth between the algos and us, the designers. We would set ranges for the outputs and adjust them, but the SOLIDS themselves are fully generated by an algorithm.

What’s your ultimate dream with SOLIDS?

Two dreams. The first is for generative architecture to become a new field within the NFT space, the way generative art has, adding a new layer that enriches the metaverse. This would make the space far more interesting and give it more depth.

The second is to see SOLIDS become portals between metaverses, so that you can walk between them using a SOLID as the link. SOLIDS will be the space that brings people together in Web3. We are actually exploring launching a social media dapp based on them.